The Ultimate Big O Cheat Sheet: Understanding Algorithm Complexities

Big O notation is a mathematical concept that is often used in computer science and algorithms to describe the performance of a given algorithm. It is a way of expressing how the time or space complexity of an algorithm grows as the size of the input data increases. In this Big O cheat sheet, we will provide a comprehensive overview of Big O notation, including its definition, notation, and common examples.

What is Big-O?

Big O notation is a way of expressing an upper bound on the time complexity of an algorithm. It describes how the running time of an algorithm grows as the size of the input data increases, ignoring constant factors and lower-order terms. In other words, it does not tell us the actual running time in seconds; it tells us how quickly the running time can grow, and it is most often quoted for the worst-case scenario.

The notation is often used to compare the performance of different algorithms, and it is a standard tool in the field of algorithm analysis.

Big-O Notation

Big O notation is typically expressed using a mathematical function, such as O(n), O(n^2), or O(log n). The letter “O” is used to indicate that we are talking about an upper bound on the running time of an algorithm. The function inside the parentheses, such as “n” or “n^2”, describes how the running time of the algorithm grows as the size of the input data increases.

For example, an algorithm that has a time complexity of O(n) will take linear time to run, while an algorithm that has a time complexity of O(n^2) will take quadratic time to run.

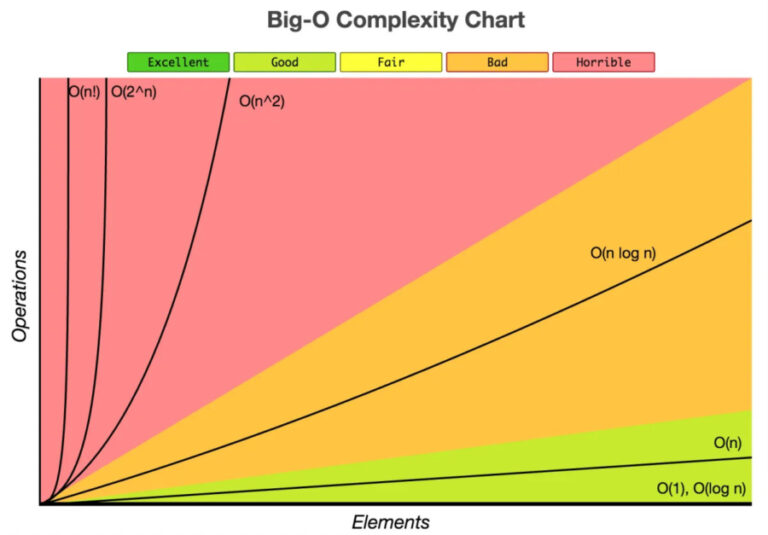

Big-O Complexity Chart

The different time and space complexities

Understanding time and space complexity is essential for optimizing the performance of your algorithms. Let’s take a closer look at some of the most common complexities you’ll encounter.

O(1) represents constant time complexity, which means that the algorithm’s running time is not affected by the size of the input. An example of an O(1) algorithm is looking up an element in an array by its index. No matter how big the array is, it will always take the same amount of time to find the element.
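
As a minimal sketch in Python (the `get_item` helper is a made-up name for illustration), indexing into a list takes a single step however long the list is:

```python
def get_item(items, index):
    """Return the element at `index`; one step regardless of len(items)."""
    return items[index]


small = list(range(10))
large = list(range(1_000_000))

# Both lookups do the same amount of work.
print(get_item(small, 5))        # 5
print(get_item(large, 500_000))  # 500000
```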

O(log n) represents logarithmic time complexity, which means that the running time grows much more slowly than the input: doubling the input size adds only a constant number of steps. An example of an O(log n) algorithm is binary search on a sorted array. The algorithm repeatedly divides the search space in half, so even as the array gets larger, the number of steps required to find the element stays small (about 20 steps for a million elements).
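
The halving idea can be sketched as follows. This is an illustrative Python version, not a reference implementation; the step counter is added only to make the logarithmic growth visible, and the input list is assumed to be sorted.

```python
def binary_search(sorted_items, target):
    """Return (index, steps); index is -1 when target is absent."""
    low, high = 0, len(sorted_items) - 1
    steps = 0
    while low <= high:
        steps += 1
        mid = (low + high) // 2
        if sorted_items[mid] == target:
            return mid, steps
        if sorted_items[mid] < target:
            low = mid + 1   # discard the left half
        else:
            high = mid - 1  # discard the right half
    return -1, steps


data = list(range(1_000_000))
index, steps = binary_search(data, 999_999)
print(index)        # 999999
print(steps <= 20)  # True: a million elements need at most 20 halvings
```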

O(n) represents linear time complexity, which means that the running time of the algorithm increases in direct proportion to the size of the input. An example of an O(n) algorithm is a simple linear search. In the worst case, the algorithm has to look at each element in the array one by one, so the larger the array, the longer it will take to find the element.
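
A small Python sketch of linear search is shown here. The comparison counter is an addition for illustration; it shows that the worst case touches every element.

```python
def linear_search(items, target):
    """Return (index, comparisons); index is -1 when target is absent."""
    comparisons = 0
    for index, value in enumerate(items):
        comparisons += 1
        if value == target:
            return index, comparisons
    return -1, comparisons


values = [4, 8, 15, 16, 23, 42]
print(linear_search(values, 42))  # (5, 6): the last element needs 6 comparisons
print(linear_search(values, 99))  # (-1, 6): a miss also checks all 6 elements
```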

O(n log n) represents linearithmic (linear-logarithmic) time complexity, which is common in divide-and-conquer algorithms that do a linear amount of work at each of about log n levels of recursion. An example of an O(n log n) algorithm is a sorting algorithm like merge sort. It halves the array repeatedly, which gives about log n levels, and merging the pieces back together takes linear time at each level.

O(n^2) represents quadratic time complexity, which means that the running time grows with the square of the input size: doubling the input roughly quadruples the running time. A typical example of an O(n^2) algorithm is a pair of nested loops over the same array, as in bubble sort. For each element in the array, the algorithm also looks at every other element, so the running time climbs quickly as the array grows.
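
To make the quadratic growth concrete, here is a hedged Python sketch that checks for duplicates by comparing every pair of elements. The `has_duplicate` function and its counter are illustrative names, not part of any library.

```python
def has_duplicate(items):
    """Compare every pair; about n * (n - 1) / 2 comparisons in the worst case."""
    comparisons = 0
    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            comparisons += 1
            if items[i] == items[j]:
                return True, comparisons
    return False, comparisons


print(has_duplicate(list(range(100))))  # (False, 4950)
print(has_duplicate(list(range(200))))  # (False, 19900)
```

Doubling the input from 100 to 200 elements roughly quadruples the number of comparisons.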

It’s important to note that these are just a few examples and that complexities can vary depending on the algorithm and the specific problem you’re trying to solve. But by understanding these basic complexities, you’ll be well on your way to optimizing your code and making it run as efficiently as possible.

Common Data Structures and Their Time and Space Complexities

| Data Structure | Insertion | Deletion | Search | Access | Space |
|---|---|---|---|---|---|
| Array | O(1) average (append), O(n) worst | O(n) average, O(n) worst | O(n) average, O(n) worst | O(1) average, O(1) worst | O(n) |
| Linked List | O(1) average, O(n) worst | O(n) average, O(n) worst | O(n) average, O(n) worst | O(n) average, O(n) worst | O(n) |
| Stack | O(1) average, O(1) worst | O(1) average, O(1) worst | O(n) average, O(n) worst | O(n) average, O(n) worst | O(n) |
| Queue | O(1) average, O(1) worst | O(1) average, O(1) worst | O(n) average, O(n) worst | O(n) average, O(n) worst | O(n) |
| Tree (Binary Search) | O(log n) average, O(n) worst | O(log n) average, O(n) worst | O(log n) average, O(n) worst | O(log n) average, O(n) worst | O(n) |
| Heap (binary) | O(1) average, O(log n) worst | O(log n) average, O(log n) worst | O(n) average, O(n) worst | O(1) average, O(1) worst (top element) | O(n) |
| Hash Table | O(1) average, O(n) worst | O(1) average, O(n) worst | O(1) average, O(n) worst | O(1) average, O(n) worst | O(n) |

Note: Each time complexity cell above lists the average case first and the worst case second; the Space column gives the worst case. Also, these are general complexities and may vary based on specific implementation details and the use case.
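
For readers working in Python, the sketch below shows how several of these structures map onto the standard library. The variable names are illustrative, and the complexities in the comments are the commonly documented ones for CPython's `list`, `collections.deque`, `heapq`, and `dict`.

```python
from collections import deque
import heapq

# Array-like list: index access is O(1); append is amortized O(1).
numbers = [10, 20, 30]
numbers.append(40)
third = numbers[2]

# Stack: push and pop at the end of a list are O(1).
stack = []
stack.append("a")
stack.append("b")
top = stack.pop()

# Queue: deque gives O(1) appends and pops at both ends.
queue = deque()
queue.append("first")
queue.append("second")
front = queue.popleft()

# Binary heap: push and pop are O(log n); peeking at heap[0] is O(1).
heap = []
for value in [5, 1, 3]:
    heapq.heappush(heap, value)
smallest = heapq.heappop(heap)

# Hash table: dict insert, lookup, and delete are O(1) on average.
ages = {"ada": 36}
ages["alan"] = 41
has_ada = "ada" in ages

print(third, top, front, smallest, has_ada)  # 30 b first 1 True
```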

Other complexity notations

Theta Notation

Theta notation is used to describe a tight bound on the time complexity of an algorithm. It bounds the growth of the running time from above and from below at once, so the running time grows at exactly that rate, up to constant factors. This makes Theta notation a more precise statement than Big O notation, which gives only an upper bound.

For example, if an algorithm has a time complexity of Θ(n), its running time is proportional to the size of the input, up to constant factors: it grows neither faster nor slower than linearly.

Omega Notation

Omega notation is used to describe a lower bound on the time complexity of an algorithm. It describes the minimum rate at which the running time grows as the size of the input grows. The lower bound guarantees that, for large enough inputs, the running time will not grow more slowly than the function inside the Omega.

For example, if an algorithm has a time complexity of Ω(n), its running time grows at least in proportion to the size of the input. On an input of size n, the algorithm takes at least on the order of n steps to complete.

Algorithm Comparisons and Trade-offs for time complexity

When it comes to solving problems, there are often multiple algorithms that can get the job done. However, each algorithm has its own strengths and weaknesses in terms of time and space complexity. In this section, we’ll take a look at some common algorithms and compare their trade-offs.

Sorting Algorithms

One of the most common problems in computer science is sorting a list of items. There are several algorithms to choose from, each with its own time and space complexity.

Bubble Sort

Bubble sort is a simple and intuitive sorting algorithm. It works by repeatedly swapping adjacent elements if they are in the wrong order. This process continues until the list is sorted. Bubble sort has a time complexity of O(n^2) in the worst case, making it impractical for large lists. However, it is a good choice for small lists or for educational purposes.
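
A minimal Python sketch of bubble sort follows. Returning a sorted copy and stopping early when a pass makes no swaps are choices made for this example, not requirements of the algorithm.

```python
def bubble_sort(items):
    """Return a sorted copy of `items` using bubble sort."""
    result = list(items)
    n = len(result)
    for end in range(n - 1, 0, -1):
        swapped = False
        for i in range(end):
            # Swap adjacent elements that are in the wrong order.
            if result[i] > result[i + 1]:
                result[i], result[i + 1] = result[i + 1], result[i]
                swapped = True
        if not swapped:
            break  # no swaps in this pass: the list is already sorted
    return result


print(bubble_sort([5, 2, 9, 1, 5, 6]))  # [1, 2, 5, 5, 6, 9]
```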

Quick Sort

Quick sort is a popular and efficient sorting algorithm. It works by selecting a pivot element and partitioning the list around that pivot. The partitioning step rearranges the list so that the elements to the left of the pivot are smaller and the elements to the right are larger. Quick sort then recursively sorts the left and right sublists. Quick sort has a time complexity of O(n log n) on average, making it much faster than bubble sort for large lists. However, it can be slower in the worst case, with a time complexity of O(n^2), for example when the pivot is always the smallest or largest element.
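
One possible in-place version is sketched below in Python. The random pivot and the Lomuto-style partition are assumptions of this sketch; other pivot rules and partition schemes are equally valid.

```python
import random


def quick_sort(items, low=0, high=None):
    """Sort `items` in place between indexes low and high (inclusive)."""
    if high is None:
        high = len(items) - 1
    if low >= high:
        return
    # Random pivot (a choice made here) makes the O(n^2) case unlikely.
    pivot_index = random.randint(low, high)
    items[pivot_index], items[high] = items[high], items[pivot_index]
    pivot = items[high]
    boundary = low  # everything in items[low:boundary] is smaller than pivot
    for i in range(low, high):
        if items[i] < pivot:
            items[i], items[boundary] = items[boundary], items[i]
            boundary += 1
    # Put the pivot between the smaller and the larger elements.
    items[boundary], items[high] = items[high], items[boundary]
    quick_sort(items, low, boundary - 1)
    quick_sort(items, boundary + 1, high)


data = [3, 6, 1, 8, 2, 9, 2]
quick_sort(data)
print(data)  # [1, 2, 2, 3, 6, 8, 9]
```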

Merge Sort

Merge sort is another efficient sorting algorithm. It works by dividing the list into two halves, sorting each half, and then merging the two sorted halves together. Merge sort has a time complexity of O(n log n) in the best, worst, and average cases. This makes it a reliable choice for sorting large lists. The trade-off is that it requires more space than quick sort, with a space complexity of O(n).
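
The divide, sort, and merge steps can be sketched in Python as follows. This version returns a new list, which is where the O(n) extra space comes from; it is an illustration rather than an optimized implementation.

```python
def merge_sort(items):
    """Return a sorted copy of `items` using merge sort."""
    if len(items) <= 1:
        return list(items)
    mid = len(items) // 2
    left = merge_sort(items[:mid])
    right = merge_sort(items[mid:])

    # Merge the two sorted halves in linear time.
    merged = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged


print(merge_sort([38, 27, 43, 3, 9, 82, 10]))  # [3, 9, 10, 27, 38, 43, 82]
```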

Searching Algorithms

Another common problem in computer science is searching for an item in a list. There are several algorithms to choose from, each with its own time and space complexity.

Linear Search

Linear search is a simple and intuitive searching algorithm. It works by starting at the first item and checking each item in the list until the desired item is found. Linear search has a time complexity of O(n) in the worst case, meaning it can take a long time to search large lists. However, it is a good choice for small lists or for educational purposes.

Binary Search

Binary search is a popular and efficient searching algorithm. It works by comparing the desired item with the middle item of a sorted list to decide whether the item lies in the left or right half. Binary search then repeats this process on the appropriate half until the desired item is found or the half is empty. Binary search has a time complexity of O(log n) in the worst case, making it much faster than linear search for large lists. However, it requires the list to be sorted; if it is not already sorted, sorting it first costs an additional O(n log n).
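
The sorting cost is paid once, after which every lookup is logarithmic. A short Python sketch using the standard `bisect` module is shown here; the `contains` helper is a made-up name for illustration.

```python
import bisect


def contains(sorted_items, target):
    """Binary search via bisect_left; `sorted_items` must already be sorted."""
    index = bisect.bisect_left(sorted_items, target)
    return index < len(sorted_items) and sorted_items[index] == target


names = ["mia", "bob", "zoe", "ada"]
names.sort()  # O(n log n), paid once

# Each lookup afterwards is O(log n).
print(contains(names, "zoe"))  # True
print(contains(names, "eve"))  # False
```

If the list is searched only once, a linear search on the unsorted data is cheaper overall than sorting first.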

Trade-offs

When choosing an algorithm, it's important to consider both time and space complexity. For example, quick sort is often faster than merge sort on average and requires less extra space, but its worst-case time complexity is O(n^2). On the other hand, merge sort is more reliable in terms of time complexity, staying at O(n log n) in every case, but it requires O(n) extra space. The right choice depends on the specific problem and the trade-offs that are acceptable.

Resources to learn more about big-O

- Introduction to Algorithms by Thomas H. Cormen, Charles E. Leiserson, Ronald L. Rivest, and Clifford Stein: https://www.amazon.com/Introduction-Algorithms-3rd-MIT-Press/dp/0262033844/

- Big O Cheat Sheet: https://www.bigocheatsheet.com/

- Time Complexity Explained with Animated Visualizations: https://www.youtube.com/watch?v=v4cd1O4zkGw

- Time and Space Complexity of Algorithms: https://medium.com/better-programming/time-and-space-complexity-of-algorithms-d7fcb0e0f3e3

- Coursera’s Algorithms and Data Structures course: https://www.coursera.org/courses/algorithms-and-data-structures

- HackerRank’s Big O section: https://www.hackerrank.com/domains/tutorials/big-o

- Competitive Programming Handbook: https://cses.fi/book/book.pdf

Conclusion

In this Big O cheat sheet, we explored various aspects of Big O notation, a way of describing how an algorithm's time and space requirements grow with the size of the input. We discussed the most common time complexities (O(1), O(log n), O(n), O(n log n), and O(n^2)) with an example of each. Additionally, we went over common data structures (arrays, linked lists, stacks, queues, binary search trees, heaps, and hash tables) and their time and space complexities for basic operations such as insertion, deletion, search, and access. We also covered the relationship between Big O notation and the related Theta and Omega notations, as well as the trade-offs between different algorithms for the same problem in terms of their time and space complexities. In conclusion, this article aimed to provide a comprehensive understanding of Big O notation and its applications in the analysis of algorithms.
